NetworkPolicy Editor: Create, Visualize, and Share Kubernetes NetworkPolicies

Implementing Network Policy is a critical part of building a secure Kubernetes-based platform, but the learning curve from simple examples to more complex real-world policies is steep. Not only can it be painful to get the YAML syntax and formatting just right, but more importantly, there are many subtleties in the behavior of the network policy specification (e.g., default allow/deny, namespacing, wildcarding, and rule combination). Even an experienced Kubernetes YAML-wrangler can easily tie their brain in knots working through an advanced network policy use case.

Over the past few years, we have learned a lot about the common challenges of implementing Kubernetes Network Policy while working with many of you in the Cilium community. Today, we are excited to announce a new free tool for the community to assist you in your journey with Kubernetes NetworkPolicy: editor.cilium.io:

The Kubernetes NetworkPolicy Editor helps you build, visualize, and understand Kubernetes NetworkPolicies.

- Tutorial: Follow the assisted tutorial to go from not using NetworkPolicies yet to a good security posture.

- Interactive Creation: Create policies in an assisted and interactive way.

- Visualize & Update: Upload existing policies to validate and better understand them.

- Security score: Check the security score of policies to understand the level of security they add to your cluster.

- YAML Download: Download policies as YAML for enforcement in your cluster with your favorite CNI.

- Sharing: Share policies across teams via GitHub Gists and create links to visualize your own NetworkPolicies.

- Automatic Policy Creation: Upload Hubble flow logs to automatically generate NetworkPolicies based on observed network traffic.

How exactly does editor.cilium.io help?

To make this more concrete, let’s explore five common gotchas we see trip up those working with Network Policy, both newbies and sometimes (gulp!) those of us who have been doing this for a while. At the end of each mistake, you’ll find a link to a short (3-5 minute) tutorial in the tool that walks you through each step required to fix the mistake.

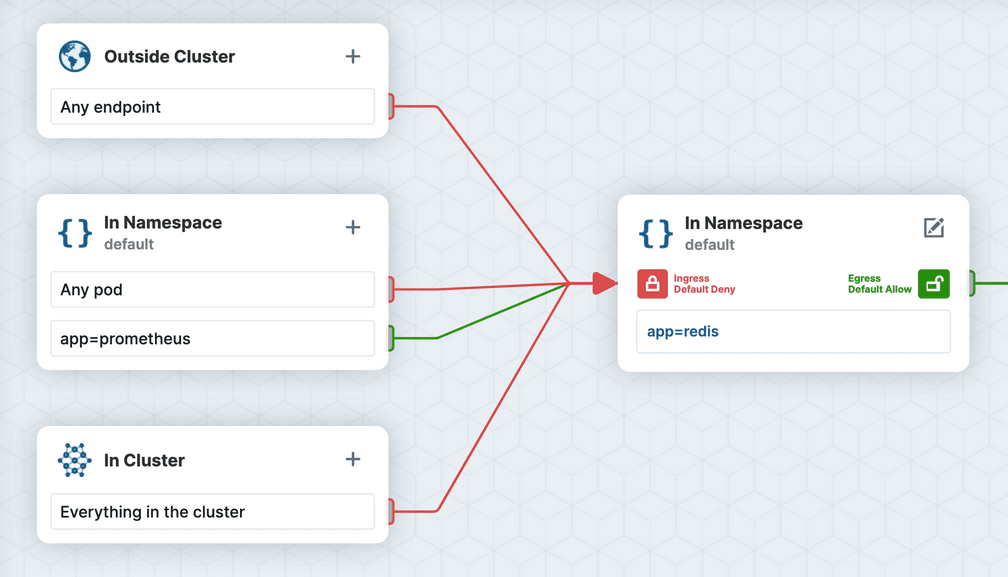

Mistake 1: Not Using a Namespace Selector

Consider a scenario where we want a centralized Prometheus instance running in a monitoring namespace to be able to scrape metrics from a Redis Pod running in the default namespace. Take a look at the following network policy, which is applied in the default namespace. It allows Pods with label app=prometheus to scrape metrics from Pods with label app=redis:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-ingress-from-prometheus
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: redis
      policyTypes:
        - Ingress
      ingress:
        - from:
            - podSelector:
                matchLabels:
                  app: prometheus

As you can see in the editor's visualization, the above network policy will only work if both Pods are in the same namespace. The podSelector is scoped to the policy's namespace unless you explicitly use namespaceSelector to select other namespaces.

How do you do this right?

🤓 Click here to see how to easily visualize and fix this policy in the editor.
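
For reference, one possible fix is sketched below. It is a minimal example, not necessarily what the editor generates: a namespaceSelector is added next to the podSelector in the same `from` entry, so the rule matches Pods labeled app=prometheus only in the monitoring namespace. The sketch assumes the namespace carries the label kubernetes.io/metadata.name=monitoring, which recent Kubernetes versions set automatically. On older clusters you would likely need to label the namespace yourself and adjust the selector.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-ingress-from-prometheus
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: redis
  policyTypes:
    - Ingress
  ingress:
    - from:
        # namespaceSelector and podSelector share ONE list item,
        # so a peer must match both (logical AND).
        - namespaceSelector:
            matchLabels:
              # Assumption: this label exists on the monitoring namespace.
              kubernetes.io/metadata.name: monitoring
          podSelector:
            matchLabels:
              app: prometheus
```

Be careful with the indentation: if podSelector were given its own leading "-", it would become a separate peer, and the rule would allow every Pod in the monitoring namespace plus every app=prometheus Pod in the default namespace.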

Mistake 2: "There is no way it’s DNS..."

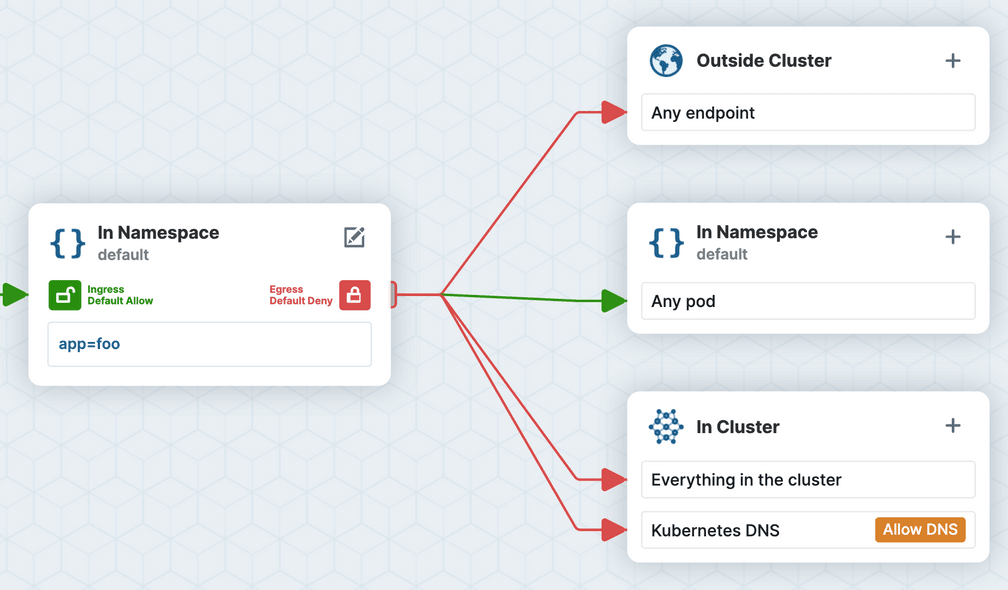

It is common that workloads must be locked down to limit external access (i.e. “egress” default deny). If you want to prevent your application from sending traffic anywhere except to Pods in the same namespace, you might create the following policy:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-kube-dns
      namespace: default
    spec:
      podSelector:
        matchLabels:
          app: foo
      egress:
        - to:
            - podSelector: {}

However, once you deploy this network policy, your application connectivity will likely be broken.

Why?

It’s not DNS

There is no way it’s DNS

It was DNS

Pods will typically reach other Kubernetes services via their DNS name (e.g., service1.tenant-a.svc.cluster.local), and resolving this name requires the Pod to send egress traffic to Pods with labels k8s-app=kube-dns in the kube-system namespace.

So how do you solve this?

🤓 Click here to see and fix the mistake in the editor
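
As a rough sketch of one way to fix this, a second egress rule can allow DNS lookups to the kube-dns Pods. The example assumes the cluster DNS Pods carry the label k8s-app=kube-dns (as described above) and listen on port 53 over UDP and TCP. Clusters with a different DNS setup, such as a node-local cache, may need different selectors or ports.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-kube-dns
  namespace: default
spec:
  podSelector:
    matchLabels:
      app: foo
  policyTypes:
    - Egress
  egress:
    # Rule 1: traffic to any Pod in the same namespace (unchanged).
    - to:
        - podSelector: {}
    # Rule 2: DNS lookups to kube-dns Pods in any namespace.
    - to:
        - namespaceSelector: {}
          podSelector:
            matchLabels:
              k8s-app: kube-dns
      # Assumption: DNS is served on the standard port 53.
      ports:
        - port: 53
          protocol: UDP
        - port: 53
          protocol: TCP
```

The empty namespaceSelector matches every namespace. To be stricter, it could instead select only kube-system by label.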

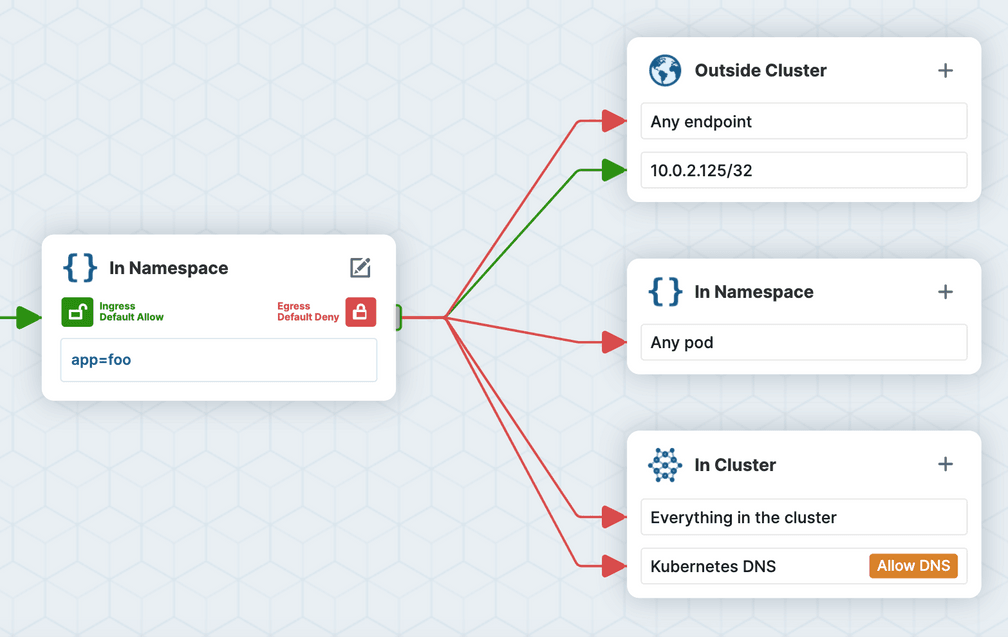

Mistake 3: Using Traditional Networking Constructs

If you come from a traditional networking background, it might be tempting to use a /32 CIDR rule to allow traffic to the IP address of a Pod as shown in the output of kubectl describe pod. For example:

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-egress-to-pod
    spec:
      podSelector:
        matchLabels:
          app: foo
      egress:
        - to:
            - ipBlock:
                cidr: 10.0.2.125/32

However, Pod IPs are ephemeral and unpredictable, and depending on the network plugin implementation, ipBlock rules might only allow egress traffic to destinations outside of the cluster. The Kubernetes documentation recommends using ipBlock only for IP addresses outside of your cluster.

So how do you solve this?

🤓 Click to learn how to allow egress to Pod.
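
A minimal sketch of the label-based alternative is shown below: the destination is selected with a podSelector rather than by IP address. The label app=bar is hypothetical and stands in for whatever labels your destination Pod actually carries. The sketch also assumes the destination runs in the same namespace as the policy; otherwise a namespaceSelector would be needed as well.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: allow-egress-to-pod
spec:
  podSelector:
    matchLabels:
      app: foo
  policyTypes:
    - Egress
  egress:
    - to:
        # Select the destination by label, not by its ephemeral IP.
        - podSelector:
            matchLabels:
              # Hypothetical label; replace with the destination Pod's own.
              app: bar
```

Because the rule follows labels, it keeps working when the destination Pod is rescheduled and receives a new IP address.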

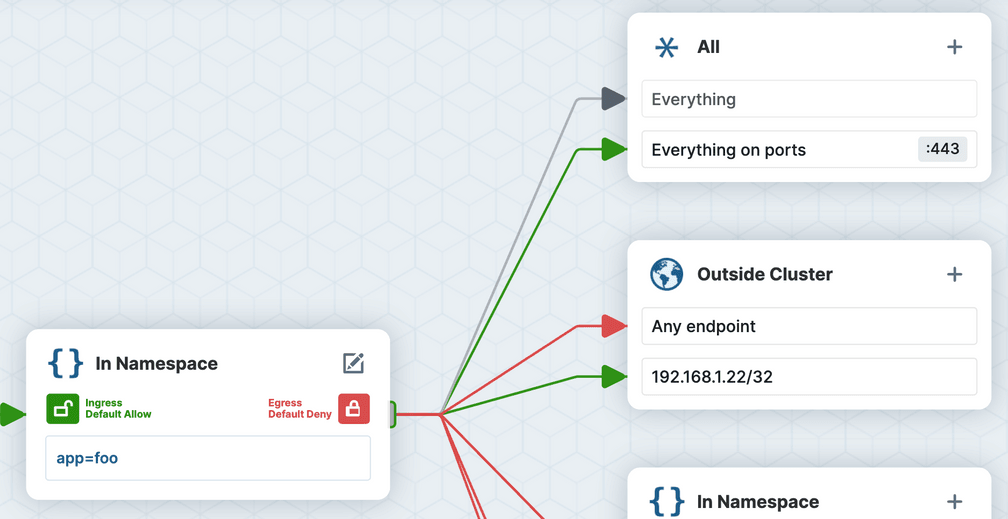

Mistake 4: Misunderstanding How Policy Rules Combine

Let's take a look at another egress policy example that seeks to allow Pods with label app=foo to establish egress connections to an external VM with IP 192.168.1.22 on port 443.

    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: egress-to-private-vm-443
    spec:
      podSelector:
        matchLabels:
          app: foo
      egress:
        - to:
            - ipBlock:
                cidr: 192.168.1.22/32
        - ports:
            - port: 443

Wait... while this is valid YAML and a valid network policy, one extra character in the YAML makes a huge difference here and ends up allowing far more connectivity than expected. The additional “-” in front of “ports” means the policy is interpreted as two separate rules: one that allows all traffic to the VM IP (on any port) and another that allows all traffic to port 443 (regardless of the destination IP address). The network policy specification dictates that rules are logically OR'ed (not AND'ed), so the Pod has significantly more connectivity than intended.

How do you prevent these mistakes?

🤓 Click to inspect the example in the Network Policy Editor
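
For comparison, here is a sketch of the policy as it was presumably intended, with `to` and `ports` in the same rule so that both conditions must hold. The only change from the example above is the removal of the “-” in front of “ports”.

```yaml
apiVersion: networking.k8s.io/v1
kind: NetworkPolicy
metadata:
  name: egress-to-private-vm-443
spec:
  podSelector:
    matchLabels:
      app: foo
  egress:
    # A single rule: destination AND port must both match.
    - to:
        - ipBlock:
            cidr: 192.168.1.22/32
      ports:
        # protocol is omitted, so it defaults to TCP.
        - port: 443
```

Within one rule, `to` and `ports` are AND'ed. Separate list items under `egress` are OR'ed, which is what made the original version so permissive.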

Mistake 5: Confusing Different Uses for “{}”

In Network Policy, empty curly braces (i.e., “{}”) can have different meanings in different contexts, leading to a lot of confusion. We’ll use this last example as a quiz. What is the difference between these two similar-looking network policy rules that both leverage “{}”? Take a guess, then look at each rule in the Network Policy Editor below to see if you were right.

    ingress:
      - {}

    ingress:
      - from:
          - podSelector: {}

🤓 Get the answer with the Network Policy Editor
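
If you would rather check your guess on the page, the two fragments are repeated here with comments describing how the NetworkPolicy specification treats each one. They are fragments of a policy spec, not complete policies.

```yaml
# First rule: an empty rule has no "from" and no "ports" restriction,
# so it allows ingress from every source, inside or outside the cluster.
ingress:
  - {}
---
# Second rule: an empty podSelector with no namespaceSelector matches
# every Pod in the policy's own namespace, so only ingress from Pods
# in that namespace is allowed.
ingress:
  - from:
      - podSelector: {}
```

In short, “{}” as a whole rule means "no restrictions", while “{}” as a selector means "all Pods within the selector's scope".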

What's Next?

We hope you found these examples useful, and would love to hear from you if you have other examples of common Network Policy “gotchas” or other interesting policies to share with the community. Feel free to try making your own network policies or dropping in existing ones to visualize and check that they do what you want them to do.

To make sharing network policy examples easy, we have added a simple Share button that leverages GitHub Gist on the backend, enabling you to convert any example you have created into an easily shared link.

Tweet us at @ciliumproject to share your examples, and in the next few weeks we’ll pick a few favorites and send the creators some exclusive Cilium SWAG!

We’d love to hear your feedback and questions on both the editor and Network Policy in the #networkpolicy channel of Cilium Slack. See you there!